End-to-End Deep Learning for Autonomous Driving in AirSim

AirSim is an open source simulator for drones and cars developed by Microsoft.

In this article, we introduce the tutorial “Autonomous Driving using End-to-End Deep Learning: an AirSim tutorial”, which runs on AirSim.

The goal of the tutorial is autonomous driving like that shown in the videos below (15-second and 90-second versions).

AirSim

AirSim is an open source simulator for drones and cars developed by the Microsoft AI and Research Group.

Like CARLA, which was covered in the previous article, it is built on Unreal Engine 4.

For details on AirSim, see the following site.

https://github.com/Microsoft/AirSim

Binaries are also provided for Windows.

In this article, we use the binary linked from the Autonomous Driving Cookbook rather than the one available on GitHub.

The Autonomous Driving Cookbook

The Autonomous Driving Cookbook is published by Microsoft on GitHub.

For details on the Autonomous Driving Cookbook, see the following site.

https://github.com/Microsoft/AutonomousDrivingCookbook

The Autonomous Driving Cookbook currently has two tutorials. One is “Distributed Deep Reinforcement Learning for Autonomous Driving”, introduced in the following article:

Deep Reinforcement Learning for Autonomous Driving in AirSim

The other is “Autonomous Driving using End-to-End Deep Learning: an AirSim tutorial”, introduced in this article.

Autonomous Driving using End-to-End Deep Learning

“Autonomous Driving using End-to-End Deep Learning” is a tutorial that estimates the steering angle from the front camera image using end-to-end deep learning. The tutorial describes itself as the “hello world” of autonomous driving.

It consists of three Jupyter notebooks that are run in order: DataExplorationAndPreparation, TrainModel, and TestModel.

Environment Setup

We do not go into detail here, but we created a Python 3.6 virtual environment for AirSim with Miniconda on Windows 10 and installed tensorflow-gpu (1.8.0) and keras-gpu (2.1.2) with conda.

Download and unzip the Autonomous Driving Cookbook (https://github.com/Microsoft/AutonomousDrivingCookbook/archive/master.zip), then run InstallPackages.py in the AirSimE2EDeepLearning directory to install the dependencies.

Next, download the dataset (https://aka.ms/AirSimTutorialDataset, about 3 GB) and extract it into the AirSimE2EDeepLearning directory.

Finally, download and extract the AirSim simulator (https://airsimtutorialdataset.blob.core.windows.net/e2edl/AD_Cookbook_AirSim.7z, a Windows binary, about 7 GB).

Both the AirSim simulator and the dataset are distributed in 7z format, so a tool that can extract 7z archives, such as 7-Zip, is required.

DataExplorationAndPreparation

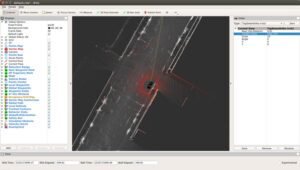

DataExplorationAndPreparation visualizes the dataset in the data_raw directory and generates train/eval/test datasets in h5 format in the data_cooked directory.
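The train/eval/test split can be pictured with a minimal sketch in plain Python. The function name `split_indices`, the 70/20/10 ratios, and the fixed seed are assumptions made for illustration and are not taken from the notebook. The sketch only partitions sample indices and does not write h5 files.

```python
import random


def split_indices(n_samples, ratios=(0.7, 0.2, 0.1), seed=0):
    """Shuffle sample indices and cut them into train/eval/test groups.

    The ratios are illustrative assumptions, not the notebook's settings.
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    indices = list(range(n_samples))
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(indices)
    n_train = round(n_samples * ratios[0])
    n_eval = round(n_samples * ratios[1])
    return {
        "train": indices[:n_train],
        "eval": indices[n_train:n_train + n_eval],
        # Whatever remains after train and eval becomes the test set.
        "test": indices[n_train + n_eval:],
    }


splits = split_indices(1000)
print({name: len(idx) for name, idx in splits.items()})
```

Shuffling before cutting prevents one subset from consisting only of frames from a single stretch of a recording. In a real pipeline, each index list would select the image and label rows written to the corresponding h5 file.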

TrainModel

TrainModel trains and evaluates a model that estimates the steering angle from the front camera image, using the train/eval datasets in the data_cooked directory.

The number of training epochs is set to 500, so the estimated training time is displayed as about 10 hours. However, because early stopping is also enabled, training ends after 30 to 40 epochs (about 45 minutes).
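To see why a 500-epoch budget can finish after a few dozen epochs, here is a minimal sketch of patience-based early stopping in plain Python. It does not use Keras. The function name `stopping_epoch`, the patience of 10, and the synthetic loss curve are all assumptions for illustration, and the notebook's actual settings may differ.

```python
def stopping_epoch(val_losses, patience=10, min_delta=0.0):
    """Return the 1-based epoch at which training would stop.

    Training stops once the validation loss has failed to improve on the
    best value seen so far for `patience` consecutive epochs. If that never
    happens, the full epoch budget is used.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses)


# Synthetic curve: the loss improves for 25 epochs, then plateaus.
losses = [1.0 / e for e in range(1, 26)] + [0.05] * 475
print(stopping_epoch(losses, patience=10))
```

With this synthetic curve, the loop stops ten epochs after the last improvement, far short of the 500-epoch budget. That is the same effect described above.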

TestModel

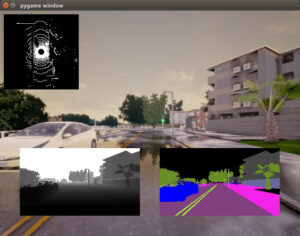

TestModel receives the front camera image and the car state (speed) from AirSim running the landscape environment, and sends car_controls, including the steering angle, back to AirSim.

To launch AirSim, run the following PowerShell command in the directory where the AirSim simulator was extracted.
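The loop of reading state, predicting steering, and sending controls can be sketched without the simulator. Everything here is a hypothetical stand-in: `Controls`, `next_controls`, `fake_model`, the throttle rule, and the target speed are assumptions for illustration, not the AirSim API or the notebook's code.

```python
from dataclasses import dataclass


@dataclass
class Controls:
    """Hypothetical stand-in for the car_controls structure sent to AirSim."""
    steering: float = 0.0
    throttle: float = 0.0
    brake: float = 0.0


def next_controls(predicted_steering, speed, target_speed=5.0):
    """Combine a predicted steering value with a simple speed rule."""
    # Assumed throttle rule: accelerate below the target speed, else coast.
    throttle = 1.0 if speed < target_speed else 0.0
    # Clamp steering to [-1, 1], assumed here to be the normalized range.
    steering = max(-1.0, min(1.0, predicted_steering))
    return Controls(steering=steering, throttle=throttle)


def fake_model(image, speed):
    """Placeholder for the trained network; returns a constant steering."""
    return -0.1


speed = 0.0
for step in range(3):
    controls = next_controls(fake_model(image=None, speed=speed), speed)
    print(step, controls)
    speed += 3.0 * controls.throttle  # toy speed update, not simulator physics
```

In the real notebook, the image and speed come from the running simulator and the trained model replaces `fake_model`. The sketch only shows how a steering prediction and the current speed could be combined into one control command per step.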

.\AD_Cookbook_Start_AirSim.ps1 landscape

To launch it in a small window (e.g. 640 x 480), use the following command instead.

.\Landscape_Neighborhood\JustAssets.exe landscape -windowed -ResX=640 -ResY=480

Summary

We introduced the tutorial “Autonomous Driving using End-to-End Deep Learning: an AirSim tutorial”, which runs on AirSim.